Clinical Trial vs Real-World Effectiveness Estimator

How This Tool Works

Based on research showing that clinical trial results often don't reflect real-world outcomes, this tool estimates how effective a treatment might be for you. Clinical trials typically include healthier, younger patients with fewer complications. This estimator adjusts for factors like age, comorbidities, and medication use to show what might happen in your specific situation.
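One way to picture the kind of adjustment described above is as a set of multipliers applied to the trial result. The sketch below is only a rough illustration: the `Patient` fields, the function name, and every attenuation factor are invented placeholders, not values taken from the tool or from any study.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    age: int
    comorbidities: int  # number of other chronic conditions
    medications: int    # number of concurrent prescriptions


def estimate_real_world_effect(trial_effect: float, patient: Patient) -> float:
    """Scale a trial effect down for a patient who differs from the trial profile.

    All factors below are hypothetical placeholders chosen for illustration.
    """
    factor = 1.0
    if patient.age > 75:
        factor *= 0.85                                # assumed penalty for older age
    factor *= 0.90 ** min(patient.comorbidities, 5)   # assumed penalty per condition, capped
    if patient.medications >= 5:
        factor *= 0.90                                # assumed penalty for polypharmacy
    return trial_effect * factor


# A trial-like patient keeps the full effect; a more complex patient does not.
print(estimate_real_world_effect(20.0, Patient(age=50, comorbidities=0, medications=0)))
print(estimate_real_world_effect(20.0, Patient(age=78, comorbidities=2, medications=6)))
```

A real estimator would need factors fitted to actual outcome data; the multiplicative form here is just the simplest shape such an adjustment could take.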

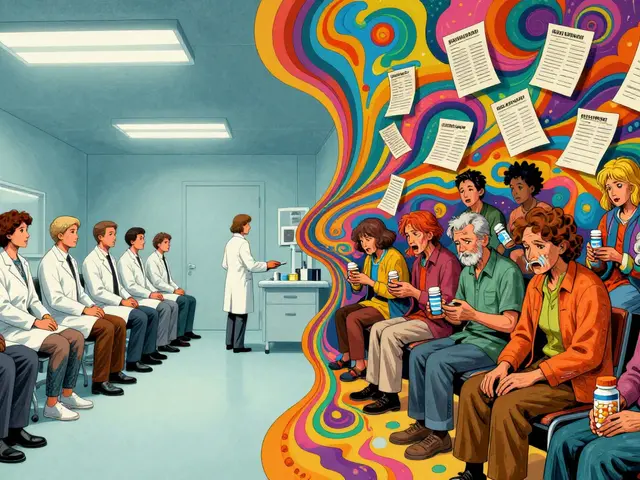

When a new drug hits the market, you hear about its success in clinical trials. But how well does it actually work for the average person? That’s where clinical trial data and real-world outcomes start to tell very different stories.

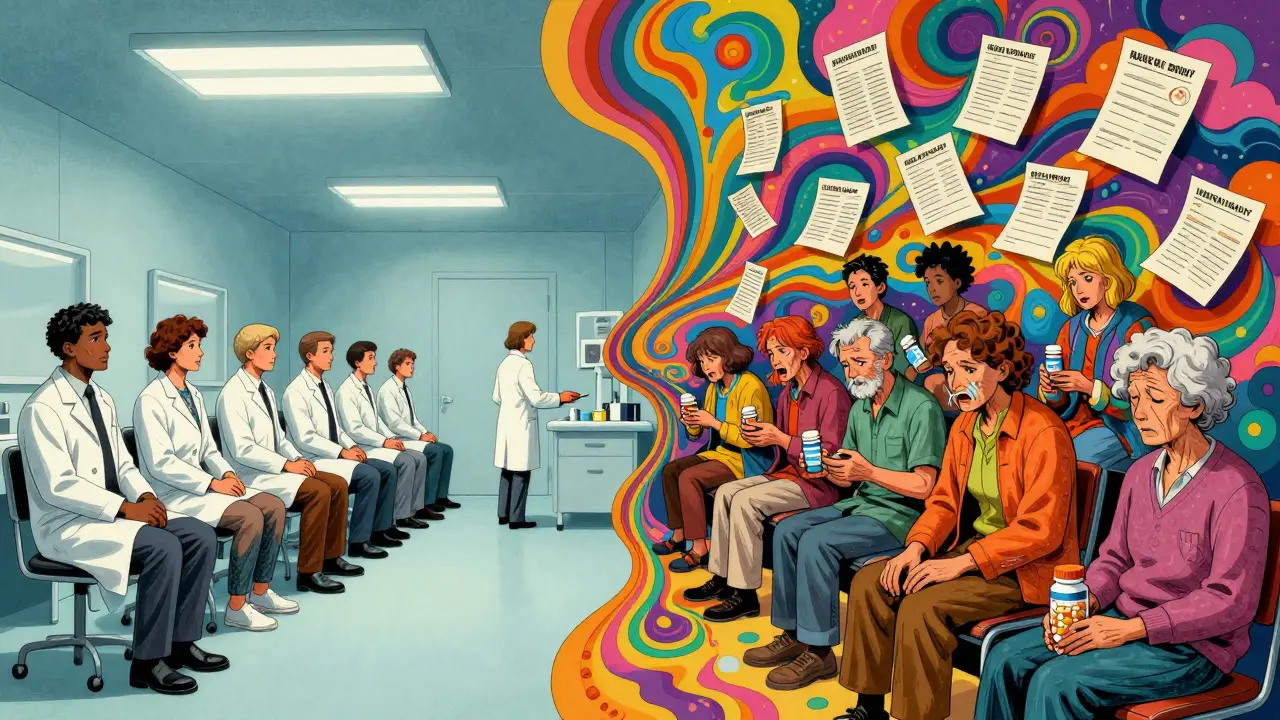

Why Clinical Trials Don’t Reflect Real Life

Clinical trials are designed to answer one clear question: Does this treatment work under perfect conditions? To get that answer, researchers control everything: age, other health conditions, lifestyle, even how often patients show up for checkups. But that perfection comes at a cost. About 80% of people who could benefit from a new drug are excluded from trials because they have other illnesses, are over 75, or don’t fit the narrow profile researchers need.

A 2023 study in the New England Journal of Medicine found that only 1 in 5 cancer patients seen at major hospitals would qualify for a typical trial. And Black patients were 30% more likely to be turned away, not because their disease was worse, but because of barriers like transportation, work schedules, or lack of access to specialized centers. So the data showing that a drug works so well? It’s based on a group that’s healthier, younger, and more privileged than most patients in real clinics.

What Real-World Data Actually Shows

Real-world outcomes come from everyday practice: electronic health records, insurance claims, wearable devices, and patient registries. This data includes people with diabetes and heart failure, older adults on five different medications, and those who miss appointments or forget pills. It’s messy. But it’s honest.

Take diabetic kidney disease. A 2024 study compared data from clinical trials with real-world records from over 23,000 patients. The trial group had 92% complete data for key outcomes. The real-world group? Only 68%. Why? Because in real life, not every blood test gets done. Not every visit happens on schedule. And sometimes, patients stop taking the drug because of side effects or cost.

Real-world data doesn’t just show how well a drug works. It shows how often it fails. It reveals that a medication that lowered blood pressure by 20 points in a trial might only drop it by 8 points in a 72-year-old with kidney disease and depression. That’s not a failure of the drug. It’s a failure of the trial to reflect reality.

The Hidden Costs of Clinical Trials

Running a Phase III clinical trial costs an average of $19 million and takes two to three years. That’s money spent on strict protocols, specialized staff, frequent lab tests, and monitoring. Meanwhile, real-world evidence studies can be done in six to twelve months for 60-75% less. They use data already being collected: no extra visits, no extra tests.

That’s why payers like UnitedHealthcare and Cigna now demand real-world evidence before covering expensive new drugs. They don’t care if it works in a controlled study. They care if it works for the 65-year-old with three chronic conditions who can’t afford to miss work for weekly infusions.

And it’s not just cost. Real-world data helps fix clinical trials too. Companies like ObvioHealth now use real-world patterns to pick better participants for trials: people more likely to stick with the treatment, or those whose medical history suggests they’ll respond. This can shrink trial sizes by 15-25% without losing accuracy. That means faster approvals and lower costs for everyone.
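To make those percentages concrete, here is a small worked calculation using the figures quoted above. The 1,000-person trial size is an assumed example, not a number from any study.

```python
PHASE3_COST_USD = 19_000_000   # average Phase III cost quoted above
SAVINGS_PCT_RANGE = (60, 75)   # real-world studies are quoted as 60-75% cheaper
SHRINK_PCT_RANGE = (15, 25)    # quoted reduction in trial size


def reduce_by(amount: int, pct: int) -> int:
    """Return `amount` reduced by `pct` percent, using integer arithmetic."""
    return amount * (100 - pct) // 100


rwe_cost_high = reduce_by(PHASE3_COST_USD, SAVINGS_PCT_RANGE[0])  # 60% less
rwe_cost_low = reduce_by(PHASE3_COST_USD, SAVINGS_PCT_RANGE[1])   # 75% less
print(f"Implied real-world study cost: ${rwe_cost_low:,} to ${rwe_cost_high:,}")

HYPOTHETICAL_TRIAL_SIZE = 1_000  # assumed enrollment, for illustration only
smaller = [reduce_by(HYPOTHETICAL_TRIAL_SIZE, pct) for pct in SHRINK_PCT_RANGE]
print(f"A {HYPOTHETICAL_TRIAL_SIZE:,}-person trial would need {smaller[1]} to {smaller[0]} participants")
```

Under these figures, a real-world evidence study would cost roughly $4.75 million to $7.6 million against the $19 million trial average.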

Why Real-World Data Isn’t Perfect Either

Real-world data sounds like the answer, but it’s not magic. It’s full of gaps. A patient might get a new prescription, but if they don’t refill it, that’s not always recorded. A wearable might stop syncing data after a few weeks. Insurance claims don’t capture side effects unless the patient goes to the ER.

And then there’s bias. If a drug is only prescribed to wealthier patients who can afford copays, the real-world data will look better than it should. If a hospital system doesn’t use the same electronic records as another, combining data becomes a nightmare. A 2023 study in Nature Communications found that 63% of attempts to merge trial data with real-world data failed because the systems didn’t speak the same language.

Experts like Dr. John Ioannidis from Stanford warn that the rush to use real-world data has outpaced the science. Some studies have claimed a drug works when trials showed it didn’t, because they didn’t account for hidden factors like income, education, or access to nutrition.

Regulators Are Catching Up

The FDA didn’t always trust real-world data. But since the 21st Century Cures Act in 2016, things have changed. Between 2019 and 2022, the FDA approved 17 drugs using real-world evidence (RWE), up from just one in 2015. The European Medicines Agency is even further ahead, using RWE in 42% of post-market safety studies in 2022.

The FDA now requires drug makers to submit data quality reports along with real-world evidence. They’re asking: How was the data collected? Who was included? Were missing values handled properly? These aren’t just bureaucratic steps. They’re the difference between useful insights and misleading noise.

The NIH’s HEAL Initiative, launched in 2021 with $1.5 billion, is using real-world data to find alternatives to opioids for chronic pain. Flatiron Health, which collects EHR data from 2.5 million cancer patients, was bought by Roche for $1.9 billion, not because it’s a clinic, but because it turned messy data into actionable insights.
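The "were missing values handled properly?" question usually starts with a simple completeness check like the one sketched below. This is an illustration only: the record layout and the field names (`egfr`, `hba1c`) are assumptions, and the four records are made-up examples rather than real patient data.

```python
def completeness(records: list[dict], fields: list[str]) -> dict[str, float]:
    """Share of records that have a non-missing value for each field."""
    if not records:
        raise ValueError("no records to assess")
    return {
        field: sum(r.get(field) is not None for r in records) / len(records)
        for field in fields
    }


# Made-up records: a value may be absent entirely or explicitly None.
records = [
    {"patient_id": "A1", "egfr": 58.0, "hba1c": 7.2},
    {"patient_id": "A2", "egfr": None, "hba1c": 8.1},
    {"patient_id": "A3", "egfr": 44.0},
    {"patient_id": "A4", "egfr": 61.0, "hba1c": None},
]

report = completeness(records, ["egfr", "hba1c"])
for field, share in report.items():
    print(f"{field}: {share:.0%} complete")
```

A figure like the 68% completeness quoted earlier is this same ratio computed over a full dataset. It says nothing yet about why values are missing, which is the harder part of a data quality report.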

The Future Is Hybrid

The real story isn’t “clinical trials vs real-world data.” It’s “clinical trials and real-world data.” Trials tell us: Can this work? Real-world data tells us: Does it work for people like my mom?

New hybrid trial designs are emerging, in which patients start in a controlled trial, then continue treatment in their normal lives, with data collected through apps and wearables. The FDA’s 2024 draft guidance supports this. AI is helping too. Google Health’s 2023 study showed AI could predict treatment outcomes from EHR data with 82% accuracy, better than traditional trial analysis.

But none of this works without quality. The 2022 VALID Health Data Act, passed by the U.S. Senate, is trying to set standards for collecting and using real-world data. Why? Because a 2019 Nature study found only 39% of RWE studies could be replicated. If you can’t check the results, you can’t trust them.

What This Means for Patients

If you’re considering a new treatment, don’t just look at the trial results. Ask: Who was in that study? Were they like me? Was the drug tested on someone my age with my other conditions? And if it’s already on the market, ask your doctor: Has this worked for other patients like me? Real-world outcomes aren’t replacing clinical trials. They’re completing them. The gold standard isn’t one or the other. It’s both.

Why do clinical trials exclude so many patients?

Clinical trials exclude patients to reduce variables that could distort the results. They want to see if the drug works under ideal conditions, so they pick healthier, younger, and more compliant participants. But that means the results don’t reflect most real patients, especially older adults, people with multiple chronic conditions, or those from underserved communities.

Can real-world data replace clinical trials?

No. Real-world data can’t replace clinical trials for proving a drug works in the first place. Trials provide the cleanest evidence of safety and efficacy. But real-world data shows how well the drug works in everyday life, something trials can’t capture. The best approach uses both: trials for approval, real-world data for ongoing use.

Is real-world data reliable?

It can be, but only if the data is high quality and analyzed correctly. Real-world data has gaps, biases, and inconsistencies. Studies that don’t account for confounding factors (like income, access to care, or medication adherence) can give misleading results. The key is using statistical methods like propensity score matching and ensuring data comes from trusted sources like large EHR systems or validated registries.
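For readers curious what propensity score matching looks like in practice, here is a minimal sketch on synthetic data. Everything in it is an assumption made for illustration: the patients are simulated, age is the only confounder, and the built-in treatment effect of -8 is arbitrary. It fits a one-variable logistic regression by gradient descent, then pairs each treated patient with the untreated patient whose propensity score is closest.

```python
import math
import random
from statistics import mean

random.seed(0)
TRUE_EFFECT = -8.0  # assumed effect built into the synthetic outcomes


def simulate(n=600):
    """Synthetic patients as (age, treated, outcome). All numbers are made up."""
    rows = []
    for _ in range(n):
        age = random.uniform(40, 85)
        # Confounding: younger patients are more likely to receive the drug.
        p_treat = 1 / (1 + math.exp((age - 62) / 6))
        treated = random.random() < p_treat
        # The outcome worsens with age whether or not the patient is treated.
        outcome = 0.4 * (age - 62) + (TRUE_EFFECT if treated else 0.0) + random.gauss(0, 3)
        rows.append((age, treated, outcome))
    return rows


def fit_propensity(rows, steps=300, lr=0.5):
    """Estimate P(treated | age) with logistic regression on standardized age."""
    ages = [r[0] for r in rows]
    mu = mean(ages)
    sd = math.sqrt(mean([(a - mu) ** 2 for a in ages]))
    z = [(a - mu) / sd for a in ages]
    y = [1.0 if r[1] else 0.0 for r in rows]
    b0 = b1 = 0.0
    for _ in range(steps):
        preds = [1 / (1 + math.exp(-(b0 + b1 * zi))) for zi in z]
        b0 -= lr * mean([p - yi for p, yi in zip(preds, y)])
        b1 -= lr * mean([(p - yi) * zi for p, yi, zi in zip(preds, y, z)])
    return [1 / (1 + math.exp(-(b0 + b1 * zi))) for zi in z]


def naive_and_matched(rows):
    """Return (naive difference in means, matched estimate among the treated)."""
    scores = fit_propensity(rows)
    treated = [(s, r[2]) for s, r in zip(scores, rows) if r[1]]
    control = [(s, r[2]) for s, r in zip(scores, rows) if not r[1]]
    naive = mean([o for _, o in treated]) - mean([o for _, o in control])
    # 1-nearest-neighbour matching on the propensity score, with replacement.
    diffs = []
    for score, outcome in treated:
        _, matched_outcome = min(control, key=lambda c: abs(c[0] - score))
        diffs.append(outcome - matched_outcome)
    return naive, mean(diffs)


naive, matched = naive_and_matched(simulate())
print(f"true effect {TRUE_EFFECT:.1f}, naive {naive:.1f}, matched {matched:.1f}")
```

The naive comparison overstates the benefit because treated patients are younger to begin with, while the matched comparison should land much closer to the built-in effect. Real analyses use many covariates, check balance after matching, and still cannot adjust for confounders that were never recorded.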

Why are payers using real-world evidence?

Payers like UnitedHealthcare and Cigna use real-world evidence because they pay for the outcomes. If a drug costs $100,000 a year but only works for a small group of healthy patients, it’s not worth it. Real-world data shows whether it works for the broader population, including those with other conditions, and whether it reduces hospital visits or emergency care. That’s what determines if it gets covered.

What’s the biggest challenge with real-world data?

The biggest challenge is fragmentation. In the U.S., there are over 900 different health systems, each using different electronic records that don’t talk to each other. Data is siloed, incomplete, and often inconsistent. Plus, privacy laws like HIPAA make sharing hard. Without standardized formats and better data linkage, real-world evidence will keep being slow and unreliable.
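As a small illustration of what "systems that don’t talk to each other" means in practice, the sketch below maps records from two hypothetical EHR exports into one shared shape. The field names, date formats, and the `GlucoseReading` type are all invented for this example. The unit conversion uses the standard factor for glucose (1 mmol/L is about 18.016 mg/dL, from a molar mass of roughly 180.16 g/mol).

```python
from dataclasses import dataclass

MGDL_PER_MMOLL_GLUCOSE = 18.016  # glucose: 1 mmol/L is about 18.016 mg/dL


@dataclass(frozen=True)
class GlucoseReading:
    """Common target format (hypothetical)."""
    patient_id: str
    date: str            # ISO 8601, YYYY-MM-DD
    glucose_mg_dl: float


def from_system_a(rec: dict) -> GlucoseReading:
    # Hypothetical System A: US-style dates (MM/DD/YYYY), glucose in mg/dL.
    month, day, year = rec["visit_date"].split("/")
    iso_date = f"{year}-{month.zfill(2)}-{day.zfill(2)}"
    return GlucoseReading(rec["pid"], iso_date, float(rec["glucose"]))


def from_system_b(rec: dict) -> GlucoseReading:
    # Hypothetical System B: ISO dates, glucose in mmol/L.
    mg_dl = round(rec["glu_mmol"] * MGDL_PER_MMOLL_GLUCOSE, 1)
    return GlucoseReading(rec["patientId"], rec["date"], mg_dl)


readings = [
    from_system_a({"pid": "A-17", "visit_date": "3/7/2024", "glucose": "112"}),
    from_system_b({"patientId": "B-04", "date": "2024-03-09", "glu_mmol": 5.5}),
]
for reading in readings:
    print(reading)
```

Each new source needs its own adapter like these, which is why shared data standards matter: they replace hundreds of one-off mappings with a single agreed format.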

How is AI helping with real-world data?

AI is making sense of messy data. Algorithms can spot patterns in electronic health records that humans miss, like which patients are likely to stop a drug, or which combinations of conditions predict side effects. Google Health’s 2023 study showed AI could predict treatment outcomes from EHR data with 82% accuracy, outperforming traditional trial analysis. But AI needs good data to work. Garbage in, garbage out.

i just had my grandma start this new heart med and she forgot to take it half the time cause she gets confused and the drs act like that's her fault

but the trial said 95% adherence?? lol no thanks

as someone who grew up in a rural town where the nearest specialist was 2 hours away, i can't believe we still treat clinical trials like gospel. my cousin with diabetes was denied a trial because she had high blood pressure-and she was 68, worked two jobs, and drove a 1998 pickup. the system isn't broken, it's designed this way.

bruh 🤡 they exclude people like me just because i have hypertension AND depression AND i don't have a private jet to get to the trial site? this ain't science, this is elitism with a lab coat. the drug works? sure. for who? the 1% who can afford to be perfect.

i... i think this is so important... but also... so overwhelming? like, how do we even fix this? the data is messy, the systems don't talk, and patients are just... tired? i just want to know if something will help my dad without him having to become a full-time medical researcher.

Let me be perfectly clear: the pharmaceutical industry has turned clinical trials into a marketing exercise disguised as science. They cherry-pick participants to manufacture success, then sell the results as if they apply to everyone. This is not innovation. This is fraud. And the FDA’s recent endorsement of real-world evidence is merely damage control, not reform.

Trials show can it work. Real data shows does it work. Both matter.

in india, we don't even get trials most times. we just get the drugs after they're sold in the us. and then we find out they don't work for us because we're not the 'ideal' patient. the system doesn't care if we live or die, just if the profit margin is good.

ohhh so now we're supposed to trust data from people who can't even take their own pills? wow. brilliant. next they'll say we should trust tiktok influencers for medical advice. real world data is just trash wrapped in fancy graphs. stop pretending it's science.

There’s a reason the FDA is moving toward hybrid trials. It’s not about replacing clinical data-it’s about augmenting it. Real-world evidence isn’t perfect, but it’s the closest thing we have to the truth outside the bubble.

it’s not about choosing between trials and real-world data-it’s about listening. listening to the person who missed their appointment because they were working two shifts. listening to the elderly woman who stopped the pill because it made her dizzy and she couldn’t afford the copay. listening to the kid in a rural clinic who’s never seen a specialist. the science isn’t in the numbers-it’s in the stories behind them.

my sister’s oncologist told her she didn’t qualify for a trial because she had ‘too many comorbidities.’ she’s 54, has type 2 diabetes, and works as a teacher. turns out, she’s exactly the kind of person who needs this drug. but according to the trial? she’s noise.

so the trial said 92% success... real world says 68%? congrats, we just proved that 24% of people are basically just bad at being patients. 🙃

While the limitations of clinical trial populations are well documented, the adoption of real-world evidence must be approached with rigorous methodological standards. Without robust data governance, the risk of confounding bias and ecological fallacy increases substantially. Regulatory agencies are beginning to implement frameworks, but standardization remains a critical gap.